I have the opposite with flooded. One day it's at 12.7V, then the next it is at 5. I went back to a standard flooded lead acid.

What I have experienced is, AGM tend to instantly go from good to bad. Almost like one cell just dies. More of a chance of getting stranded. Flooded lead acid tend to get weak at the end of their life. The vehicle starts cranking slower. A good warning sign to replace.


Anyone good at tracking down parasitic drains?

- Thread starter twentyfooteighty


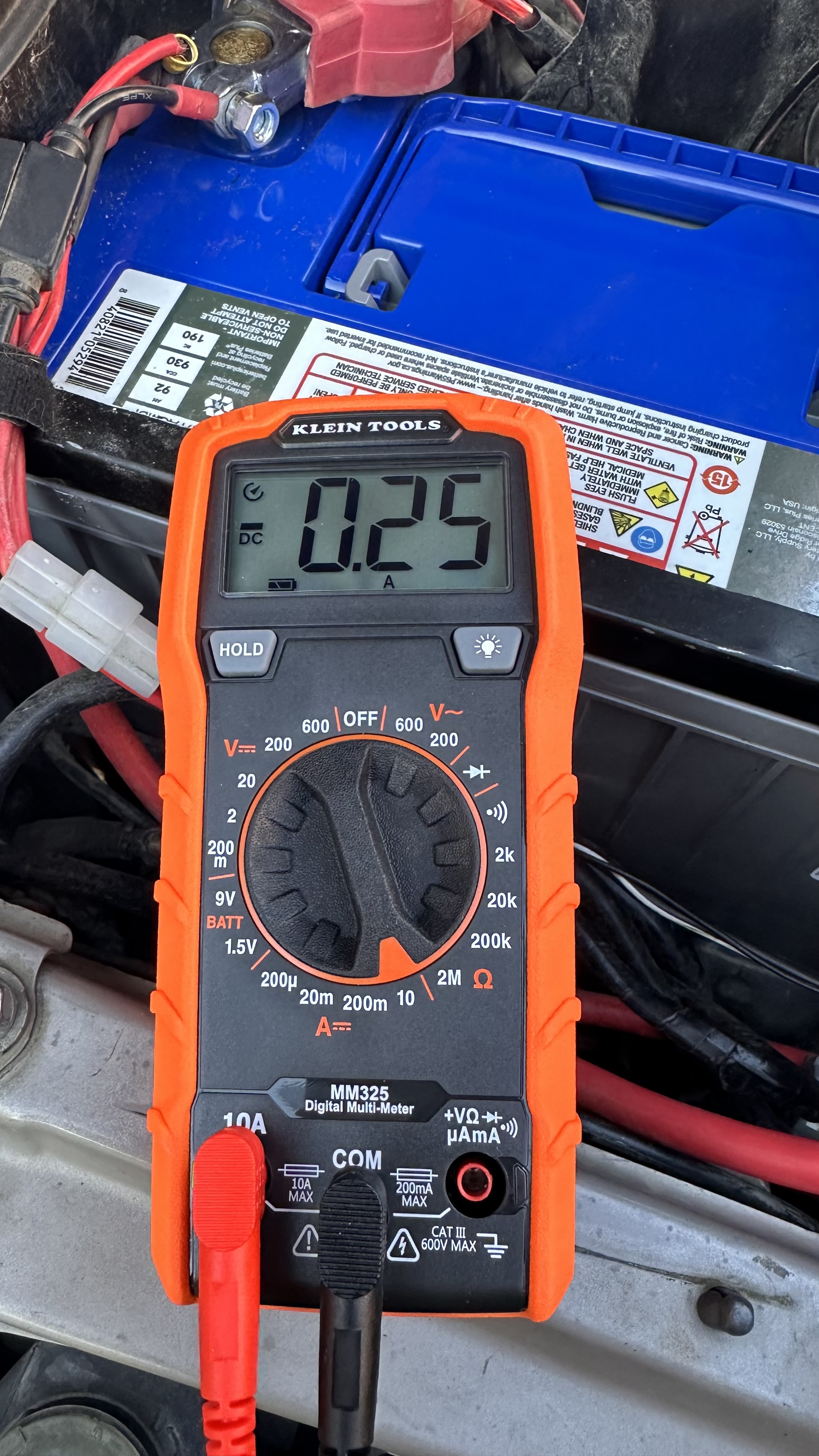

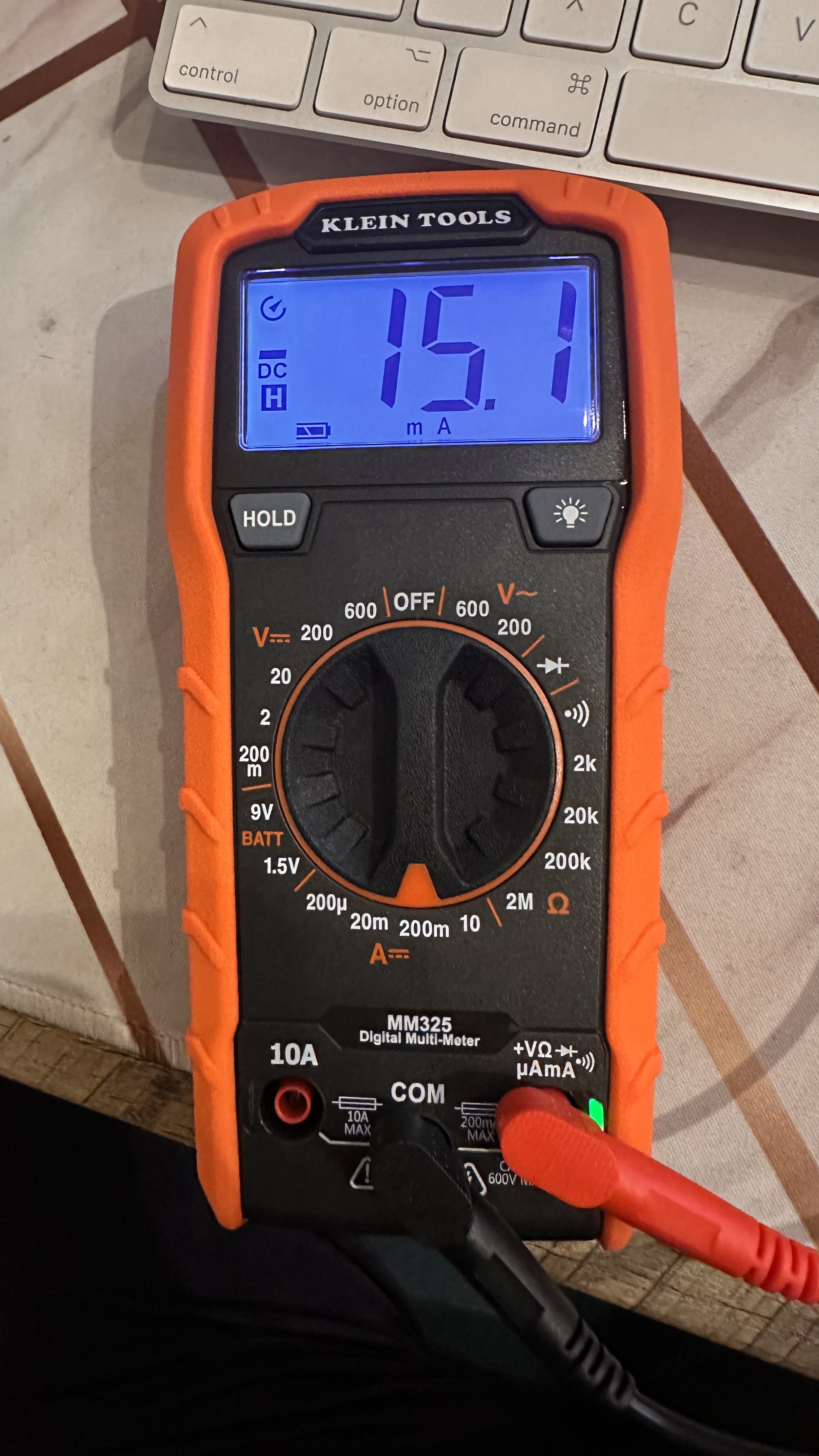

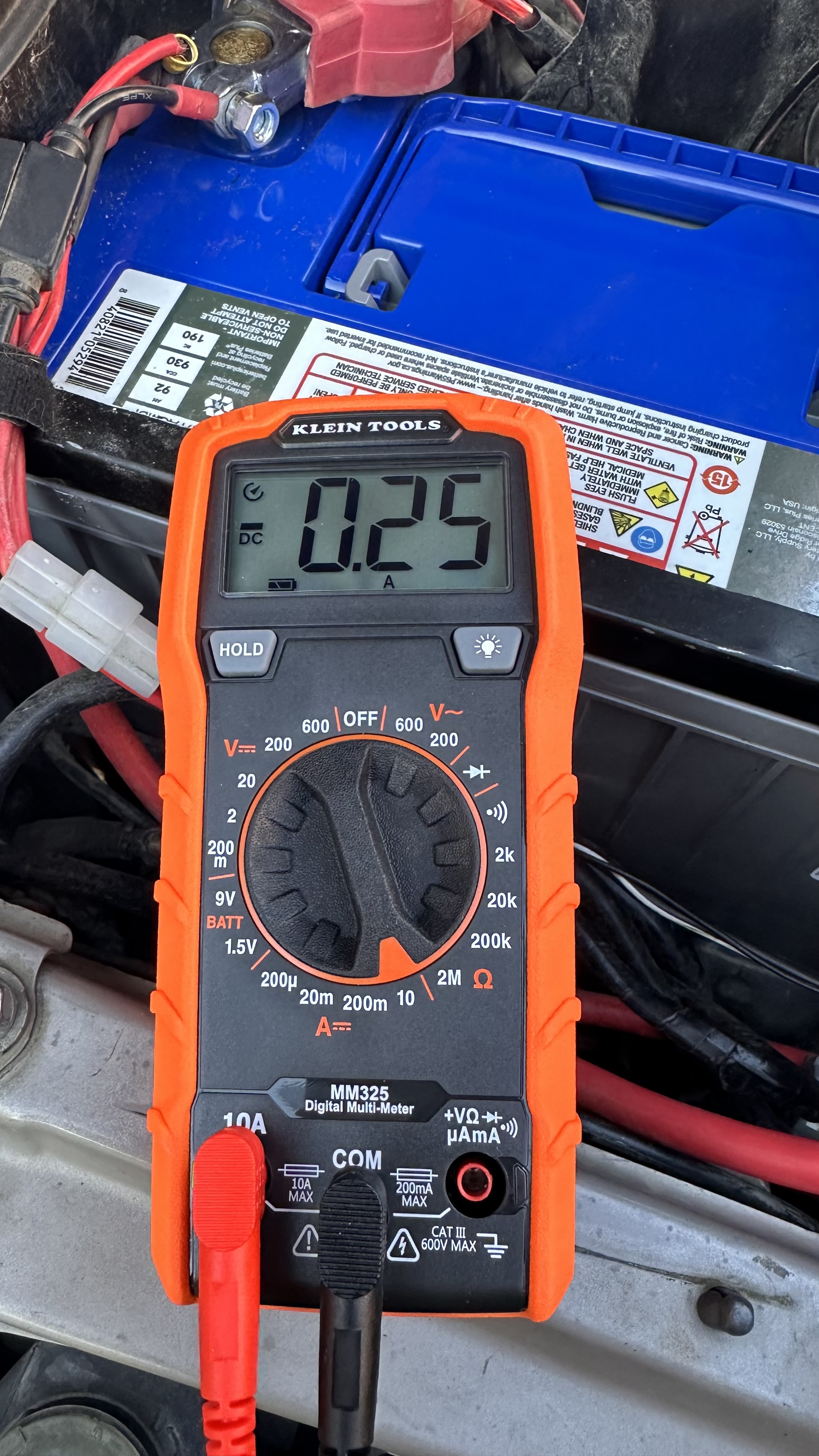

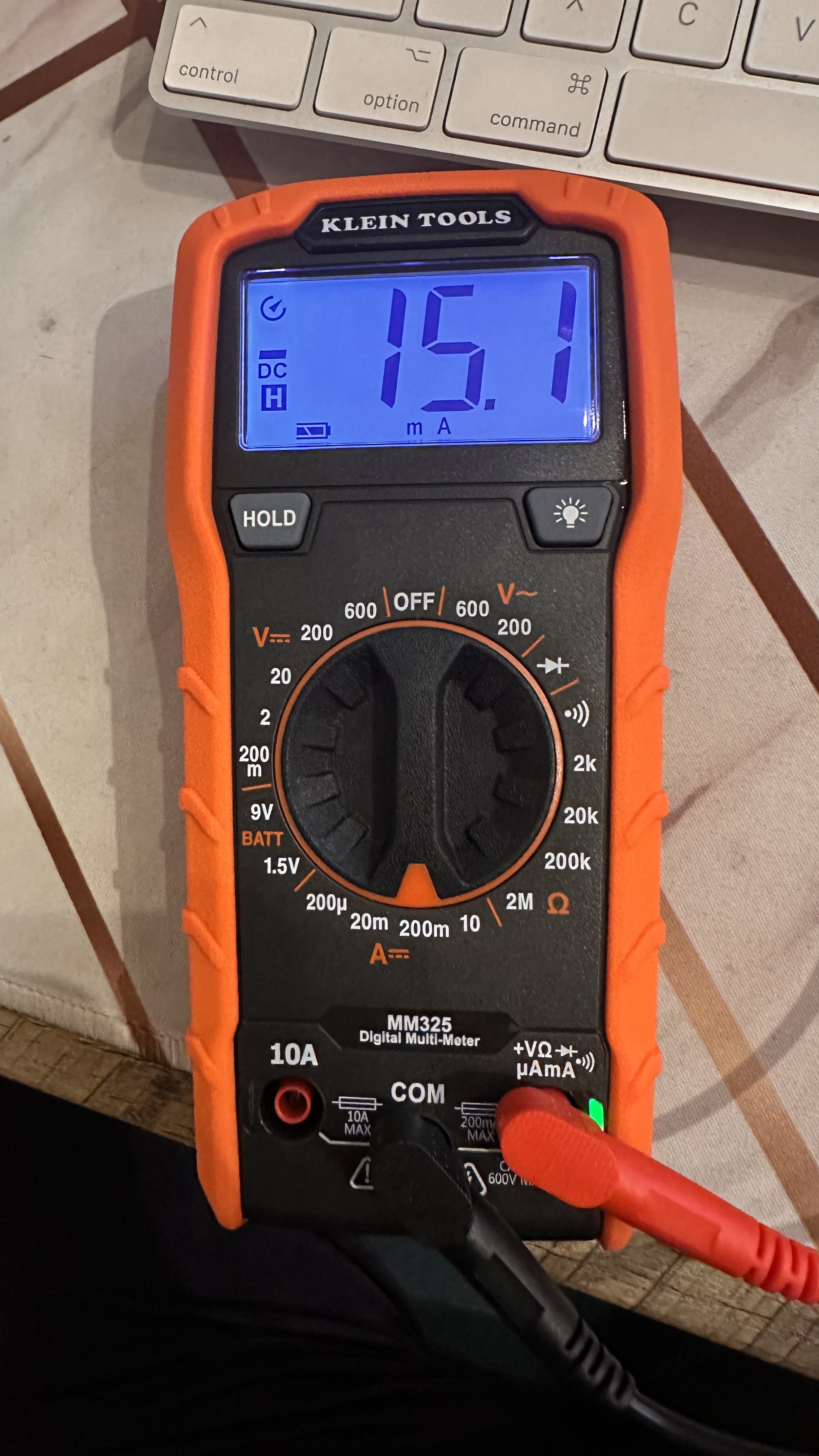

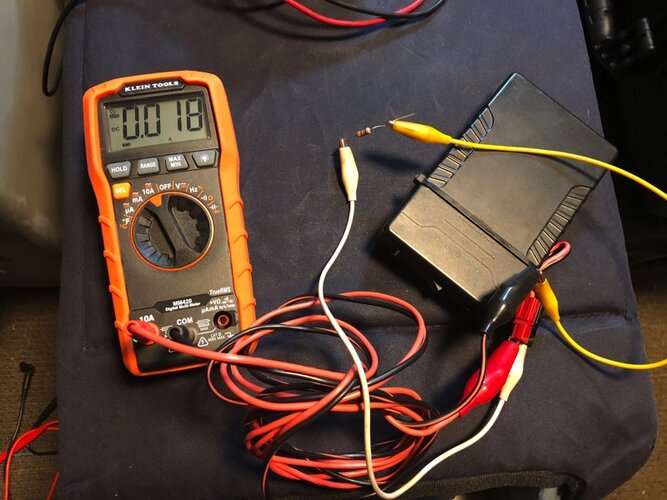

Welp, I am at a loss. I took readings at 10A then went to 200mA. I'm not sure if this means 250mA or 15mA. Either I am good, or I have a hell of a draw.

DaveInDenver

Rising Sun Ham Guru

What's the connection to the battery?

All you did was move the red lead from "10A" to "VΩ" and nothing else?

Your actual current is closer to 15.1mA than 250mA.

To understand why requires being an EE...

If you're reading this, then OK.

The 10A scale in this meter apparently doesn't read very low values correctly. It's specified to be ±(2% reading + 5 counts) with 10mA resolution, which means at full scale the error could be as much as 265mA. Since that's about what it's showing for a very low actual current, it's probably just displaying its lower limit whenever it sees any current flow. It would probably show "0.25" until you got higher than ~375mA on 10A (normally you'd expect it to say zero below its minimum resolution). At that point I think readings would fall into the 2% give-or-take accuracy.

Edit. Now that I think about it I notice you may have the meter's polarity reversed. That could trip up the ADC into rolling over. If you reverse leads I suspect it may fall into line. On 10A it might show 10mA up to as high as 30mA, depends on how the error is handled.

When you switch to the 200mA range the accuracy is ±(1% reading + 5 counts) with 100μA (0.1mA) resolution, so an indicated 15.1mA could, with uncertainty, be off by around 255μA (0.255mA) either way.
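For anyone who wants to redo the spec arithmetic, here is a minimal sketch of the usual ±(% of reading + counts) formula. The function name `dmm_uncertainty` is made up for illustration, and the percent, count and resolution figures are simply the ones quoted above for each range, not checked against the meter's data sheet.

```python
def dmm_uncertainty(reading_ma, pct_of_reading, counts, resolution_ma):
    """Worst-case error band (in mA) for a spec of +/-(pct% of reading + counts).

    One "count" is one step of the least significant displayed digit,
    i.e. the resolution of the selected range.
    """
    return abs(reading_ma) * pct_of_reading / 100.0 + counts * resolution_ma


# 10A range as quoted: +/-(2% + 5 counts), 10mA resolution, at full scale
full_scale_10a = dmm_uncertainty(10_000.0, 2.0, 5, 10.0)

# 200mA range as quoted: +/-(1% + 5 counts), 0.1mA resolution, at 15.1mA
at_15ma = dmm_uncertainty(15.1, 1.0, 5, 0.1)

print(f"10A range, full scale: +/-{full_scale_10a:.0f} mA")
print(f"200mA range, 15.1 mA reading: +/-{at_15ma:.3f} mA")
```

With those inputs the band works out to 250mA at full scale on the 10A range and roughly 0.65mA around a 15.1mA reading, so the exact figures in the post may rest on slightly different assumptions. Either way, the 200mA range is the one to trust for a draw this small.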

To go any further would require explanation of analog-to-digital conversion, e.g. ADC bit-width (this appears to be a 10-bit ADC), signal-to-noise, sampling, binary math and display issues. Suffice to say this meter's guts are not exactly pushing any performance envelopes.


Inline connection to battery - negative battery terminal removed, red lead to battery post, black to cable terminal. Modes and positions on the meter as shown.

DaveInDenver

Rising Sun Ham Guru

"Correct" circuit would be:

battery (+) -> red battery cable(s) still connected -> truck stuff -> black battery cable(s) removed -> DMM positive lead -> DMM stuff -> DMM COM lead -> battery (-)

Current will physically flow either way through the DMM sensor (it's just a resistor in series with a fuse), but the meter wants to see current flow into its positive lead and back out of COM to get the minus sign right. Conventional current is said to flow out of the battery's positive terminal, through the load, and back to the battery's negative terminal.

It's only important in a pedantic way. You actually have ~15mA of parasitic it seems in any case.


I am not sure that I follow all those arrows. Are you saying I have the leads from the DMM backwards?

This is the process that I used to test.

www.innova.com


How to test for a Parasitic Draw

Sometimes, a battery may experience significant drain long after the engine has been shut off. It might not be a faulty battery causing this.

DaveInDenver

Rising Sun Ham Guru

I know. I was trying to find a schematic to illustrate. I'll update for later reference but it really isn't important to your troubleshooting.

Per your link, this step is wrong. Red and black are reversed for proper polarity. Black (COM) to battery negative to get the negative sign on the DMM right.


15mA is pretty low. I guess I don't have a drain. I have no idea what is killing my batteries then.

DaveInDenver

Rising Sun Ham Guru

Yes, if 15mA is true that's right in line with what you'd expect.

You could check on 20mA range and try getting polarity right to see if 10A range comes into agreement, but I don't see anything so far that would suggest 15mA isn't right.
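To put 15mA versus 250mA in perspective, here is a rough back-of-envelope sketch. The 70Ah capacity and the 50% usable fraction are placeholder assumptions, not numbers from this thread, and the estimate ignores self-discharge, temperature and battery age.

```python
def days_until_drained(draw_ma, capacity_ah=70.0, usable_fraction=0.5):
    """Rough days for a constant parasitic draw to use up the usable capacity.

    capacity_ah and usable_fraction are hypothetical defaults; substitute
    the rating of your own battery.
    """
    if draw_ma <= 0:
        raise ValueError("draw_ma must be positive")
    usable_mah = capacity_ah * 1000.0 * usable_fraction
    hours = usable_mah / draw_ma
    return hours / 24.0


for draw in (15.0, 250.0):
    print(f"{draw:.0f} mA -> about {days_until_drained(draw):.1f} days")
```

Under those assumed numbers, 15mA takes on the order of three months to pull the battery down, while 250mA takes under a week, which is why it matters which of the two readings is real.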

I think you're chasing an undercharged X2 that is jumping up to bite you due to cold weather. I never could get Odysseys to stay happy longer than 2 years in my Tacoma without a lot of care and feeding with regular external charging.


The X2 is brand new, and I trickled it before I put it in. I just noticed that it was draining faster than I had hoped.

My concerns started with the 2 cratered Interstate flooded batteries from Costco in the last year. My initial thought was parasitic draw, but now I am starting to think that maybe something else (alternator) is what is killing them?

DaveInDenver

Rising Sun Ham Guru

Have you checked what the voltage is while running? Overcharging or AC (ripple) is just as damaging, and having them go bad that quickly could point to a failed diode in the alternator, a broken wire in the harness (like ALT_S, which is the voltage sense for the alternator) or the voltage regulator.

BTW, I'm not suggesting you should abandon the excessive parasitic draw theory. I'd still try to get two measurements that agree to confirm. A second meter ideally, but at least two ranges that basically agree on magnitude and polarity.

I have an UltraGauge. It shows when I first start that I am around 14.6-14.7, then tapers off to 14.2 after a few moments. I've seen it go as low as 13.8 from time to time.


I have a second DMM, but the 200mA fuse popped. I have some fuses on order, and can double-check with that one once they arrive.

DaveInDenver

Rising Sun Ham Guru

Those make sense, typical of most Toyota trucks from roughly 2015 and earlier.

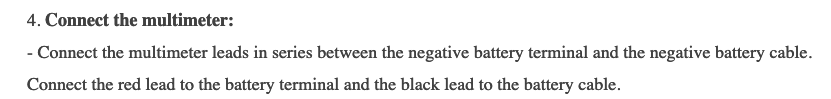

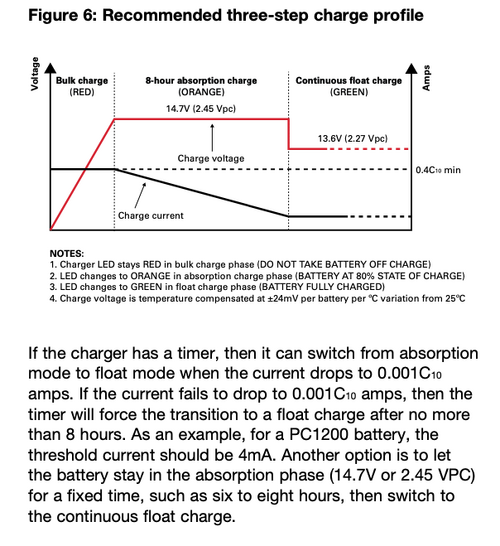

But that's also why I mention it's low for Odyssey/X2. They need ~14.7V (up to an absolute maximum of 15.0V) at 25°C consistently for as much as 8 hours during absorption to fully charge.

DaveInDenver

Rising Sun Ham Guru

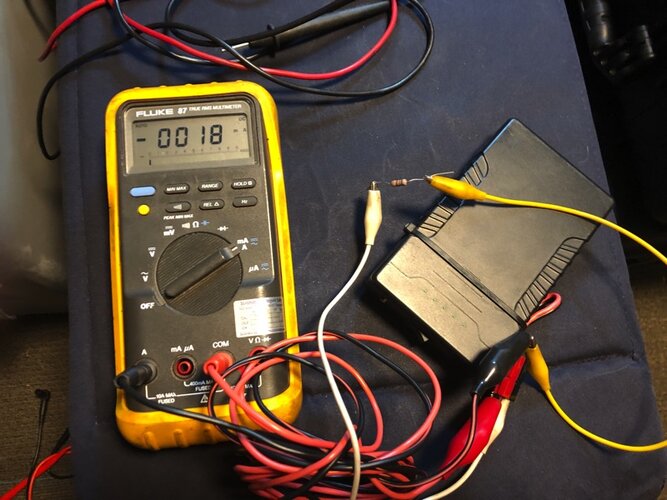

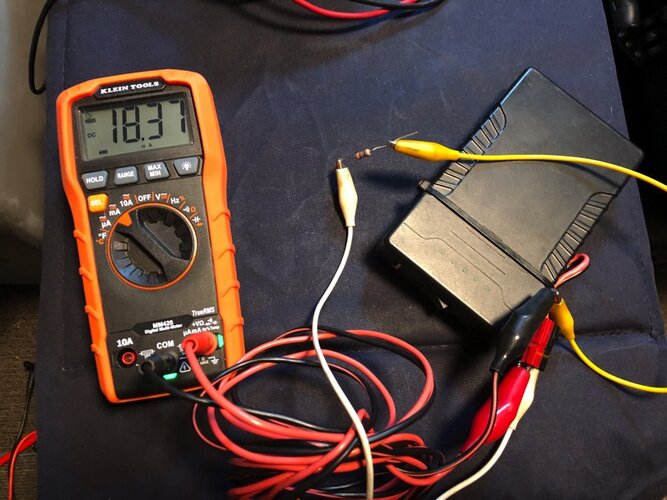

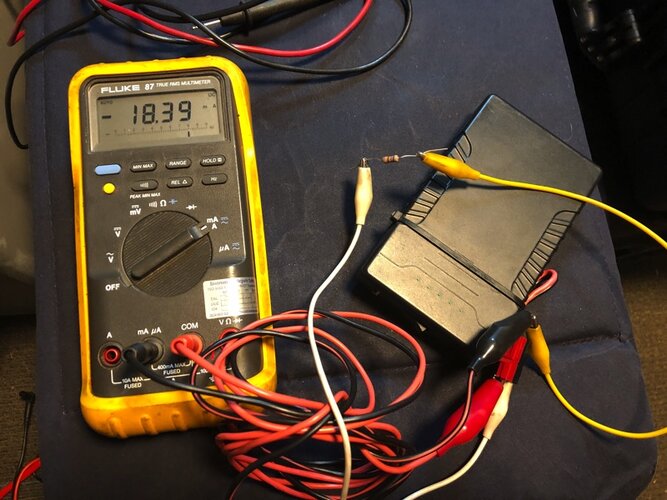

You got me wondering @damon about those Klein meters. I have a MM420 that I carry in the truck. This carries the same specifications for accuracy and resolution as yours. Adds a few more features but I suspect is going to be similar on the core.

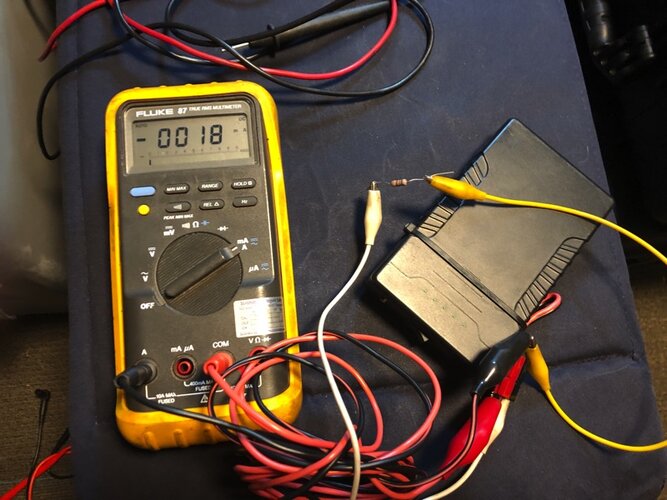

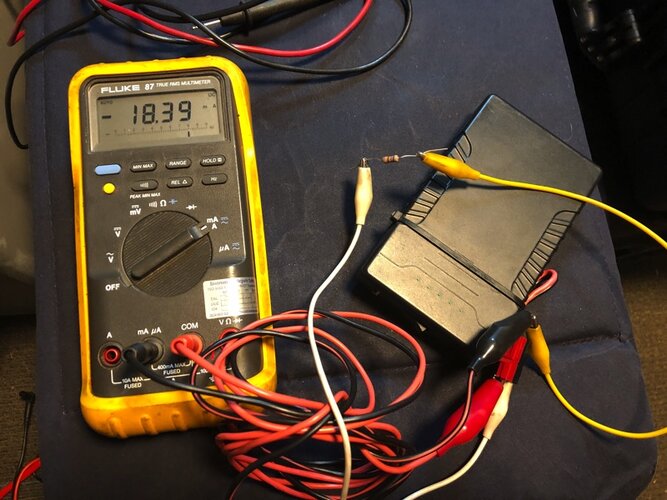

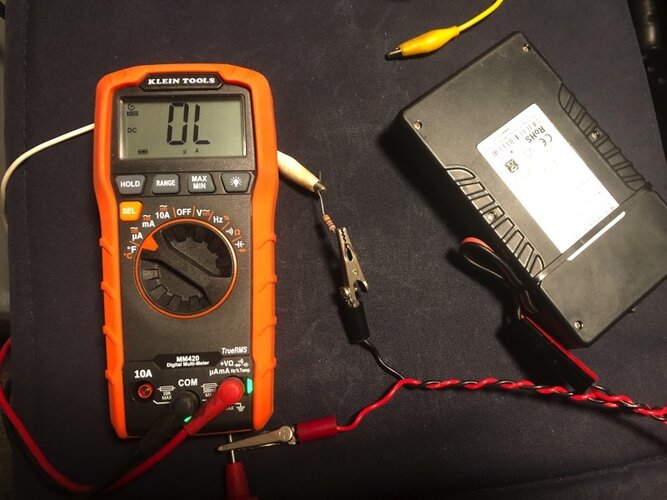

Compared it against a Fluke 87 Series I.

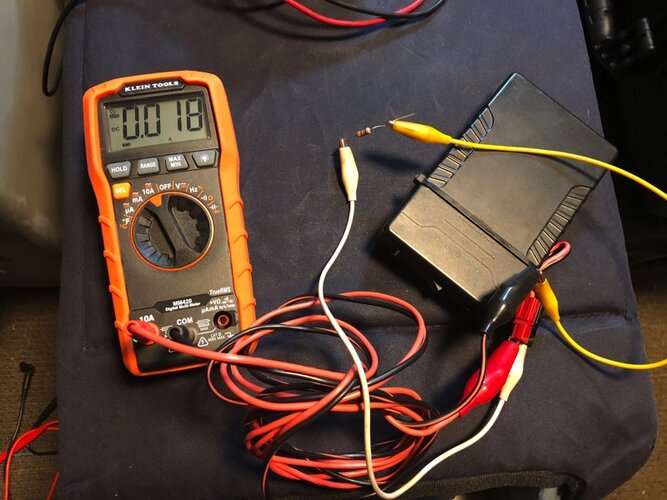

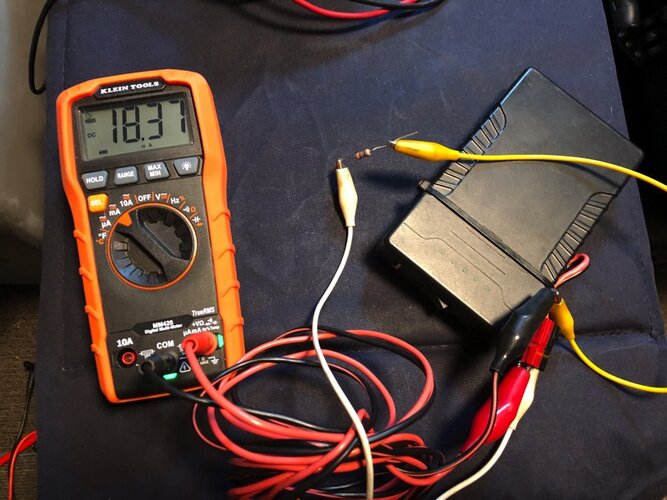

This is just a 12V pack (measures 12.4V) feeding a 680Ω resistor, so should be 18.24mA on paper. Both agree very well within all the tolerances and limits of this junk box stuff. I also flipped polarity on them which did not confuse the Fluke (which I knew didn't care) nor the Klein. So that speculation on my part is completely bogus.
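The on-paper figure is just Ohm's law. Here is a quick sketch for checking a bench setup like this one; the function names are illustrative, and the 18.37mA value is the measured load current mentioned later in this post.

```python
def expected_current_ma(volts, ohms):
    """Ohm's law, I = V / R, returned in milliamps."""
    return volts / ohms * 1000.0


def percent_deviation(measured, expected):
    """Signed deviation of a measurement from the expected value, in percent."""
    return (measured - expected) / expected * 100.0


# Pack voltage and resistor value as given in the post
expected = expected_current_ma(12.4, 680.0)
print(f"expected: {expected:.2f} mA")
print(f"deviation of 18.37 mA reading: {percent_deviation(18.37, expected):+.2f} %")
```

A deviation of under one percent sits comfortably inside the ±(1% + 5 counts) spec quoted earlier for the mA range, before even considering the resistor's own tolerance.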

I see no reason to suspect your DMM isn't accurate, mine at least seems like a completely fine meter.

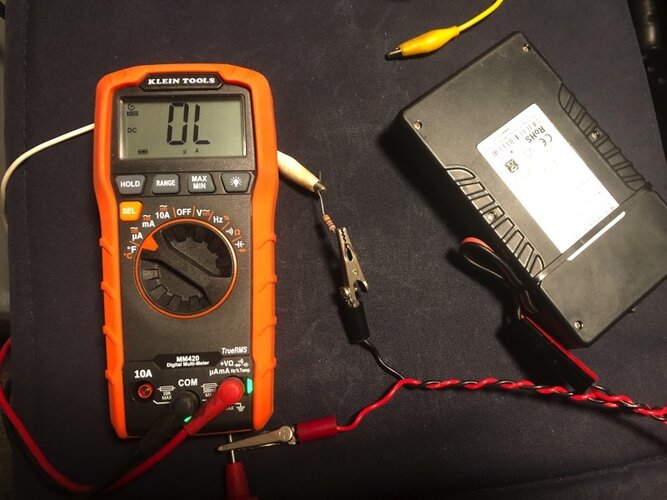

Using 10A ranges.

Using mA ranges.

And it later occurred to me that "What if the current is actually 250mA, what might the meter show on the 200mA range?" It should recognize that and as long as you don't blow the fuse should display a fault. My Fluke gives an "OL" in this case for overload.

There are fuses in these current circuits. Mine have 440mA or 1A fast-blow and I assume yours will have one, too, although I don't know for sure what it's rated. It could be smaller to match the ranges.

So when I set my Klein to the 4000μA range with that 18.37mA (18,370μA) load, it goes to OL, too. So yep, it handles that correctly.


Inukshuk

Rising Sun Member

Guess we'll do your Jag Alt and see what happens.

I'm a few years in with an Odyssey in my 80 and though I have it on a charger a lot while it sits in the garage, in general I notice no battery performance issues.

60wag

Rising Sun Member


I think I got my slow battery drain resolved. It's in the circuit that controls the ring light around the ignition key. I found a thread on MUD that describes the issue in detail. The short fix is to unplug the green relay behind the passenger side kick panel. The ring light doesn't work any more but the battery is holding voltage better than it has in several years. This is for a 1996 80 series, not sure if other model trucks are wired the same.

My 1995 does the same thing. The ring light is eternally on.